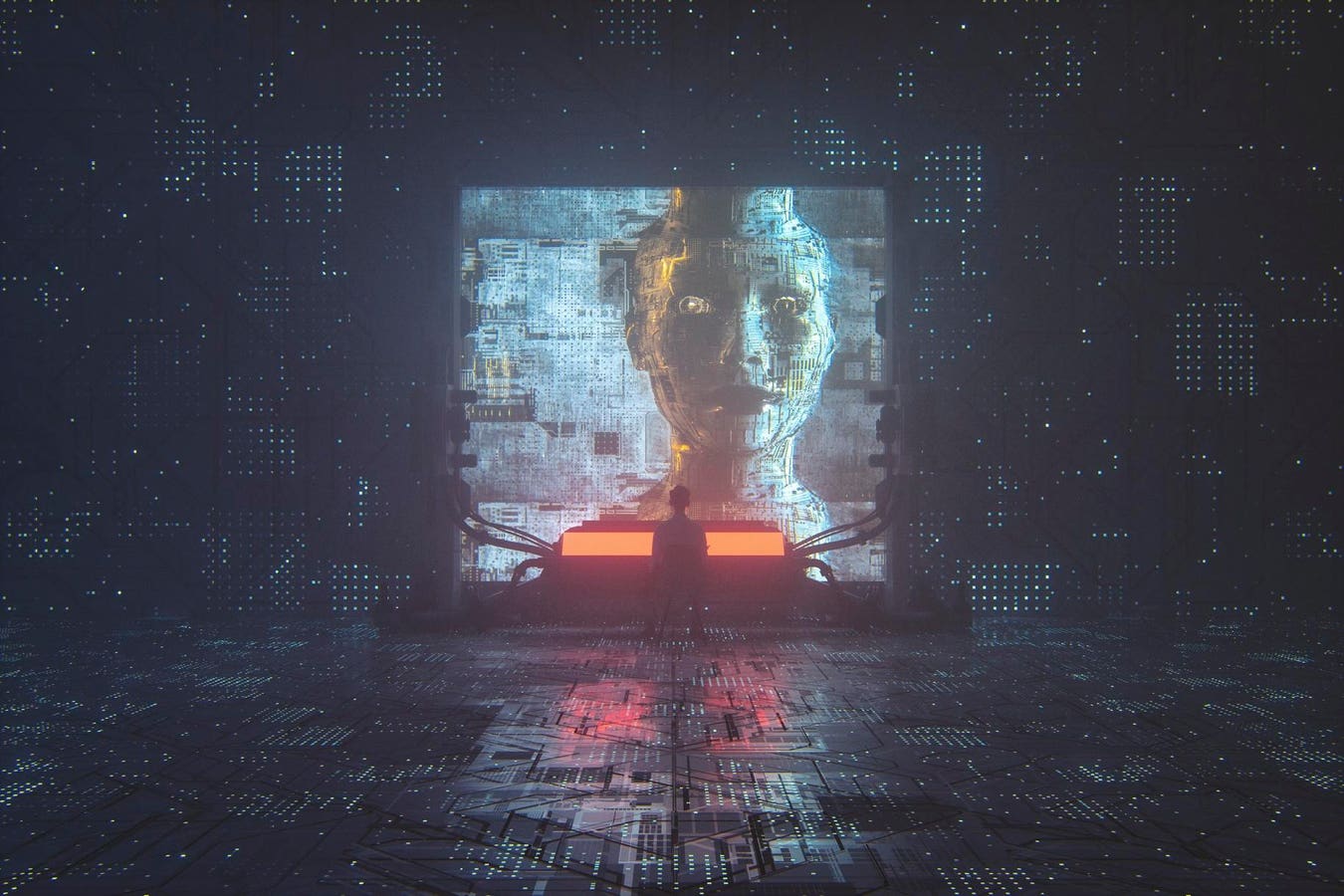

Emotional AI: Are Chatbots a Health Risk?

Editor’s Note: Concern is growing about the potential mental health risks of emotionally intelligent chatbots. This article explores the key issues and suggests ways to reduce potential harm.

Why This Topic Matters

The rapid advancement of emotional AI (artificial intelligence that detects, interprets, and responds to human emotions) has led to widespread adoption of emotionally intelligent chatbots in sectors ranging from customer service to mental health support. These tools offer convenience and potential benefits, but they also raise important questions about their impact on mental well-being. This article examines how the design and use of emotionally intelligent chatbots might inadvertently worsen existing mental health problems or even create new ones. Developers, users, and policymakers need to understand these risks to ensure responsible innovation and prevent harm. Key areas of concern include the potential for addiction, the blurring of lines between human and AI interaction, and the ethical implications of using AI for mental health support.

Key Takeaways

| Risk Category | Key Concern | Mitigation Strategy |
|---|---|---|
| Addiction | Over-reliance and dependence on chatbot interaction | Promote balanced use, encourage human interaction |
| Emotional Manipulation | Chatbots exploiting vulnerabilities for profit | Transparent design, ethical guidelines |
| Privacy Concerns | Data collection and potential misuse | Strong data protection measures, user consent |
| Misinformation | Spread of inaccurate or harmful information | Fact-checking mechanisms, responsible development |
| Misplaced Trust | Over-dependence on AI for emotional support | Clear communication of AI limitations |


Introduction: The rise of emotionally intelligent chatbots presents a paradox. These AI companions offer comfort and convenience, yet their very ability to mimic human empathy raises concerns about harm to mental health. This section reviews those concerns and their implications for individuals and society.

Key Aspects:

- Addiction Potential: Compulsive use of social media and online gaming has been widely studied. Emotionally intelligent chatbots, with their personalized responses and seemingly empathetic interactions, could reinforce similar compulsive patterns.

- Emotional Manipulation: Sophisticated chatbots can learn individual users' preferences and could be designed to exploit their vulnerabilities. This raises ethical concerns about manipulation, particularly of vulnerable groups such as children or people in emotional distress.

- Privacy and Data Security: The collection and use of personal data by chatbots raise serious privacy concerns. Sensitive information shared with these AI companions could be vulnerable to breaches or misuse.

- Misinformation and Bias: Chatbots trained on biased data can perpetuate harmful stereotypes and misinformation, negatively impacting users' mental and emotional states.

Detailed Analysis:

- Addiction: Studies have found correlations between excessive social media use and symptoms of depression and anxiety, although a correlation does not by itself show that one causes the other. The constant availability and personalized nature of emotional AI chatbots could amplify similar effects. Their potential to become a way of escaping or avoiding real-life problems is a major concern.

- Emotional Manipulation: Consider a sales chatbot that subtly plays on a user's emotions to make a purchase more likely. This example highlights the ethical dilemmas inherent in emotionally intelligent AI and shows why regulations and ethical guidelines are needed to prevent exploitation.

- Privacy and Data Security: The data collected by chatbots, including personal conversations and emotional responses, must be handled responsibly. Strong encryption, anonymization techniques, and transparent data policies are necessary to mitigate risks.
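As a rough illustration of the anonymization step mentioned above, the Python sketch below shows how a chatbot backend might prepare a message before logging it. It is a minimal example under stated assumptions, not a production design: it assumes each message is stored together with a user ID, and the names `PSEUDONYM_KEY`, `pseudonymize_user_id`, `redact_message`, and `prepare_log_record` are hypothetical. The user ID is replaced with a keyed HMAC-SHA256 hash, and e-mail addresses and phone-like numbers are masked with simple regular expressions. Strictly speaking this is pseudonymization rather than full anonymization, because the remaining message text may still identify a person.

```python
import hashlib
import hmac
import re

# Hypothetical secret key. A real system would load it from a secrets manager.
PSEUDONYM_KEY = b"replace-with-a-secret-key"

# Deliberately simple patterns for two common identifiers. They will miss many
# kinds of personal data and may over-redact (e.g. dates such as 2024-01-15).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def pseudonymize_user_id(user_id: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a raw user ID with a keyed hash (64 hex characters).

    The same ID always maps to the same value, so one user's messages can
    still be linked in the logs without storing the original ID.
    """
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def redact_message(text: str) -> str:
    """Mask e-mail addresses first, then phone-like digit sequences."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def prepare_log_record(user_id: str, message: str) -> dict:
    """Build the record that would be written to storage (hypothetical schema)."""
    return {"user": pseudonymize_user_id(user_id), "message": redact_message(message)}


if __name__ == "__main__":
    record = prepare_log_record(
        "alice-42", "I'm anxious. Email me at alice@example.com or call +1 555 123 4567."
    )
    print(record["message"])  # prints: I'm anxious. Email me at [EMAIL] or call [PHONE].
```

A keyed hash is used instead of a plain hash so that someone who obtains the logs cannot simply hash a list of known user IDs and match them. Anyone holding the key still can, so the key must be protected as carefully as the raw data.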

- Misinformation and Bias: If a chatbot is trained on biased data, it can reflect and even amplify those biases in its interactions. This could have serious consequences, particularly for marginalized groups.


The Role of Empathy in AI

Introduction: The very feature that makes emotional AI so compelling – its ability to simulate empathy – also presents a significant risk. Understanding the limitations of AI empathy is crucial.

Facets:

- Simulated vs. Genuine Empathy: Chatbots can mimic empathy but lack genuine understanding and emotional experience. Users need to be aware of this distinction.

- The Risk of Over-Reliance: Over-dependence on AI for emotional support can hinder the development of healthy coping mechanisms and real-life relationships.

- Ethical Considerations: Using AI to provide emotional support raises questions about accountability, informed consent, and how a chatbot should respond when a user is in crisis.

Summary: While simulated empathy can be comforting, it's crucial to recognize its limitations and avoid replacing human interaction altogether.

Mitigating the Risks

Introduction: Addressing the potential health risks associated with emotional AI requires a multi-faceted approach involving developers, users, and policymakers.

Further Analysis:

- Transparency and Disclosure: Chatbot developers should be transparent about their AI's capabilities and limitations. Clear labeling and disclosure are essential.

- User Education: Users need to be educated about the potential risks and benefits of interacting with emotionally intelligent chatbots.

- Regulatory Frameworks: Governments and regulatory bodies need to develop frameworks to address the ethical and safety concerns surrounding emotional AI.

Closing: Responsible development and use of emotional AI is crucial to harness its benefits while mitigating its potential harms. Open dialogue and collaboration between stakeholders are key to ensuring a safe and ethical future for this technology.

People Also Ask

Q1: What is Emotional AI?

A: Emotional AI refers to artificial intelligence systems capable of understanding, interpreting, and responding to human emotions.

Q2: Why is emotional AI in chatbots a concern?

A: Concerns arise from the potential for addiction, emotional manipulation, privacy breaches, misinformation, and the displacement of genuine human interaction.

Q3: How can emotional AI chatbots benefit me?

A: They can provide convenient customer service, offer personalized support, and even provide basic emotional support in certain contexts (though not a replacement for professional help).

Q4: What are the main challenges with emotional AI?

A: Main challenges include ensuring privacy, preventing manipulation, addressing bias in data, and mitigating the risk of addiction and over-reliance.

Q5: How can I use emotional AI responsibly?

A: Be mindful of your usage time, understand the AI's limitations, prioritize real-life human connections, and report any unethical behavior or manipulation.

Practical Tips for Using Emotional AI Safely

Introduction: These tips can help you navigate the world of emotional AI responsibly and minimize potential risks.

Tips:

- Limit your interaction time: Set boundaries for how long you interact with emotional AI chatbots daily.

- Be aware of potential manipulation: Approach interactions critically, keeping in mind that some chatbots are designed to influence your behavior, for example to keep you engaged or to make a sale.

- Prioritize real-life relationships: Don't let AI replace meaningful human connections.

- Protect your privacy: Be cautious about the information you share with chatbots.

- Seek professional help when needed: Emotional AI chatbots are not a substitute for professional mental health services.

- Report unethical behavior: If you encounter manipulative or harmful behavior from a chatbot, report it to the developer.

- Stay informed: Keep up-to-date on the latest research and developments in emotional AI.

- Promote responsible development: Support companies and developers committed to ethical AI practices.

Summary: By following these tips, you can leverage the benefits of emotional AI while mitigating potential risks.

Transition: The rise of emotional AI presents both exciting opportunities and significant challenges. Let's conclude by reflecting on the future.

Summary

This article explored the emerging concerns surrounding the potential health risks associated with emotionally intelligent chatbots. We examined the risks of addiction, manipulation, privacy violations, and misinformation. The key takeaway is the need for responsible development, transparent design, and informed user engagement to harness the benefits of this technology while mitigating potential harm.

Closing Message

The future of emotional AI is in our hands. By fostering open dialogue, promoting ethical development, and empowering users with knowledge, we can navigate the complex landscape of this powerful technology and ensure its benefits are realized while minimizing its risks. What steps will you take to promote responsible AI?
